
I received a pile of scrap metal from a friend and wanted to build a railing out of it that would still meet city code. The challenge was that no single remnant was long enough to meet the height requirement, so I came up with this offset-45 design as a solution. Happy with how it turned out!

Fire up those chemokines! Created this sequence (concept to completion) in 5 days given only an audio clip & general script guidelines.

Rendering has always been a black art with the render engines of the past. With Mental Ray, combining deformation motion blur with ambient occlusion was a recipe for disaster. On the GPU, Redshift handles it with no problem at all.

Here is a Redshift vs Mental Ray sequence test. Cheats and workarounds for computationally expensive processing like global illumination, motion blur, depth of field, and refractions/reflections are a thing of the past!

Took a chance buying a new but cosmetically damaged Tesla K20c off eBay. It had a small ding on one corner, so I got it for pennies on the dollar. Works perfectly! Because it's a headless GPU compute card, I can switch its driver model to TCC (Tesla Compute Cluster) mode, which lets the hardware run without the overhead of the Windows display driver. In TCC mode my test renders were 50% faster, so it's worth the trouble to see whether your headless GPU can run in TCC mode; Titans can, and possibly others as well. Combined with the Quadro K5000, the NVIDIA drivers go into Maximus mode. Redshift screeeeeams!

Redshift GPU rendering of the Reading Nook scene. This used to take hours to render with Mental Ray.

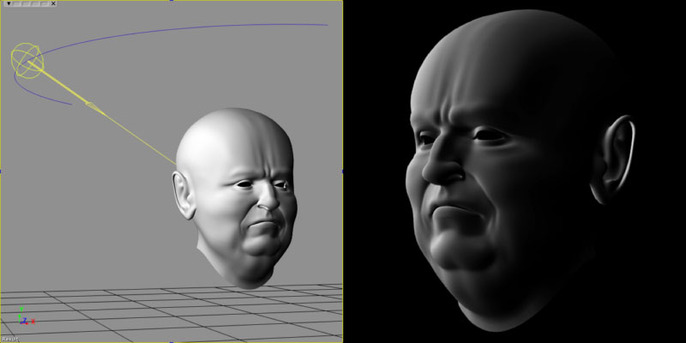

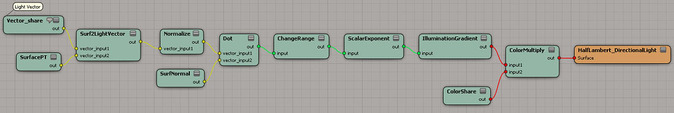

Wired up a Half-Lambert shader. It's a nice technique for simulating slight subsurface light scattering, or very rough objects where light energy is transmitted from its hit location to nearby matter. It gives the nice soft fall-off made famous in a Valve paper.

I've uploaded a preset for this material. Note that this material uses a vector shader node (I've commented it) to calculate shading, not a light.
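For reference, here is a minimal Python sketch of the Half-Lambert term as Valve formulated it: N·L is remapped from [-1, 1] to [0, 1] and then squared, so the shading only reaches black on the side facing directly away from the light instead of clipping at the terminator. This is not the preset's node network; the fixed `light_dir` is a hypothetical stand-in for the vector node, and the function names are illustrative.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, to_light):
    # Classic Lambert: clamp N.L at zero, so everything past 90 degrees is black.
    return max(0.0, dot(normalize(normal), normalize(to_light)))


def half_lambert(normal, to_light):
    # Remap N.L from [-1, 1] to [0, 1], then square it (Valve's formulation)
    # for a soft fall-off that wraps light around past the terminator.
    n_dot_l = dot(normalize(normal), normalize(to_light))
    return (0.5 * n_dot_l + 0.5) ** 2


if __name__ == "__main__":
    light_dir = (0.0, 0.0, 1.0)  # hypothetical fixed shading vector, not a scene light
    for normal in [(0, 0, 1), (1, 0, 1), (1, 0, 0), (0, 0, -1)]:
        print(normal, round(lambert(normal, light_dir), 3),
              round(half_lambert(normal, light_dir), 3))
```

At the terminator (N·L = 0) plain Lambert gives 0 while Half-Lambert still gives 0.25, which is where the soft, slightly translucent look comes from.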

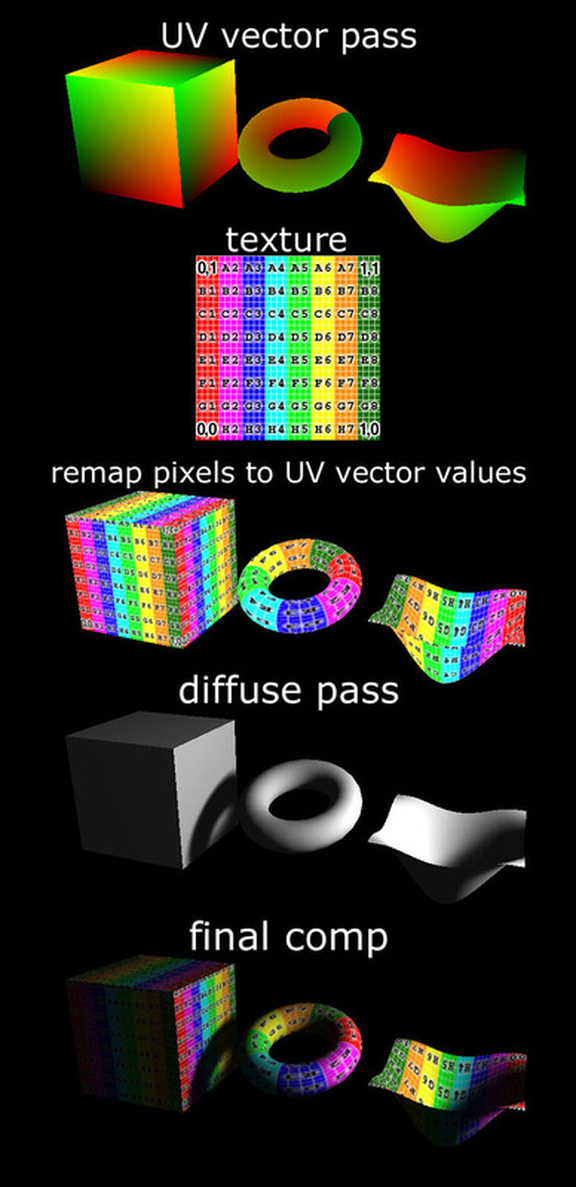

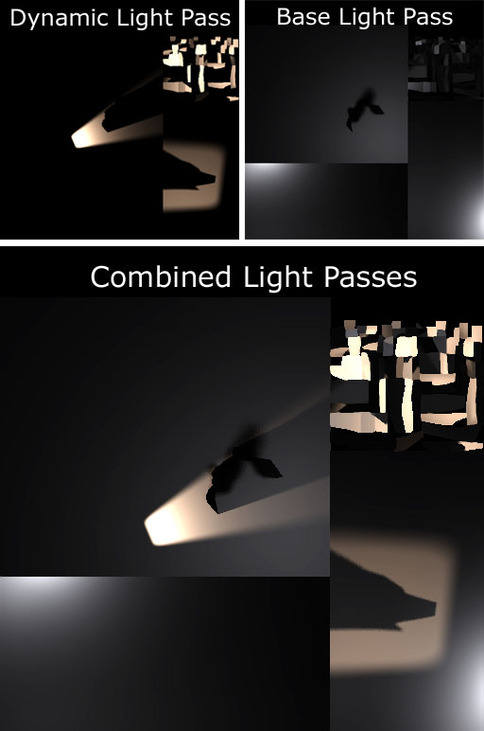

Many game engines use deferred rendering these days rather than forward rendering. In addition, many film shots have been authored with deferred techniques and then assembled and shaded in Nuke. The concept is basically the same for real-time engines and offline renderers: encode 3D data into 2D space (buffers), then solve for the lighting/shading as a post process. If you are interested in lighting/shading in post, check out the Postlight tool by Andy Nicholas.
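To make the "encode to buffers, then shade as a post process" idea concrete, here is a toy deferred shading pass in Python. Assumptions: a tiny hand-filled G-buffer holding per-pixel normals and albedo, a single directional light, and plain Lambert shading; real engines store more channels (depth, specular, and so on) and handle many lights. The key point is that `shade_pass` (a hypothetical name) reads only the 2D buffers, so the lighting can change without re-rendering any geometry.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length else (0.0, 0.0, 0.0)


def shade_pass(normal_buffer, albedo_buffer, light_dir, light_color):
    """Light every pixel using only data already stored in the 2D buffers."""
    l = normalize(light_dir)
    out = []
    for normal_row, albedo_row in zip(normal_buffer, albedo_buffer):
        row = []
        for n, albedo in zip(normal_row, albedo_row):
            # Lambert term computed per pixel from the stored normal
            n_dot_l = max(0.0, sum(a * b for a, b in zip(normalize(n), l)))
            row.append(tuple(a * c * n_dot_l for a, c in zip(albedo, light_color)))
        out.append(row)
    return out


if __name__ == "__main__":
    # 2x2 "G-buffer" written once by the (imaginary) geometry pass
    normals = [[(0, 0, 1), (1, 0, 1)],
               [(0, 1, 0), (0, 0, -1)]]
    albedo = [[(1.0, 0.5, 0.25), (1.0, 1.0, 1.0)],
              [(0.2, 0.8, 0.2), (1.0, 1.0, 1.0)]]
    # Relighting only re-runs shade_pass; the buffers are never re-rendered.
    for light in [(0, 0, 1), (0, 1, 1)]:
        print(shade_pass(normals, albedo, light, (1.0, 1.0, 1.0)))
```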

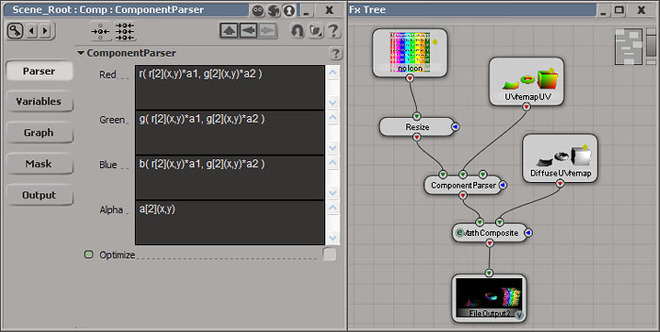

This deferred texture mapping test is a similar idea and mimics a tool by RevisionFX. For offline rendering, this additional UV vector pass can save re-rendering an image or animation by allowing me to swap textures after rendering is complete.

The Component Parser is the compositing node used to map the texture to the UV vector data. The variable a1 = horizontal pixel count of the image and variable a2 = the vertical pixel count.

Objects can be easily textured in post. Swapping textures happens in real time, without the need to re-render.
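A rough Python sketch of what the UV-pass lookup does, assuming nearest-neighbour sampling, UVs stored in the 0-1 range, and V running bottom-to-top while image rows run top-to-bottom (conventions vary between packages). Here `tex_w` and `tex_h` are assumed to play the role of the Component Parser's a1 and a2; `remap_texture` is a hypothetical name, and this illustrates the idea rather than the node's actual expression.

```python
def remap_texture(uv_pass, texture):
    """Texture a rendered image in post by looking up each pixel's stored UV."""
    tex_h = len(texture)      # like a2: vertical pixel count
    tex_w = len(texture[0])   # like a1: horizontal pixel count
    out = []
    for row in uv_pass:
        out_row = []
        for u, v in row:
            # Scale normalized UVs to texel indices and clamp to the texture.
            tx = min(tex_w - 1, max(0, round(u * (tex_w - 1))))
            # Flip V (assumed bottom-up) to match top-down image rows.
            ty = min(tex_h - 1, max(0, round((1.0 - v) * (tex_h - 1))))
            out_row.append(texture[ty][tx])
        out.append(out_row)
    return out


if __name__ == "__main__":
    # UV pass rendered once; each pixel stores the (u, v) of the surface it sees.
    uv_pass = [[(0.0, 1.0), (1.0, 1.0)],
               [(0.0, 0.0), (1.0, 0.0)]]
    checker = [["black", "white"], ["white", "black"]]
    stripes = [["red", "red"], ["blue", "blue"]]
    # Swapping textures is just another lookup; nothing is re-rendered.
    print(remap_texture(uv_pass, checker))
    print(remap_texture(uv_pass, stripes))
```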

This is a great cheat for low-cost 'dynamic' lights. If you bake lightmaps in passes, this effect is very easy to author.

Essentially, I combine two lightmaps, adding the dynamic map over the base map. For this demo, I do exactly that. In a practical application, you would not want to double your lightmap texture space for a whole level. Instead, create a pass where duplicate chunks of localized geometry hold low-resolution dynamic maps (or even make a larger texture sheet for all the dynamic maps in your level; a 512x512 will do), and bake to a new set of UVs.
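The additive combine can be sketched in Python as follows. Assumptions: the base and dynamic lightmaps share the same size and UV layout (as in the demo, not the packed low-resolution sheet suggested for a full level), texel values are linear RGB in 0-1, and `flicker` is a hypothetical intensity curve for animating the 'dynamic' light.

```python
import math


def combine_lightmaps(base, dynamic, intensity):
    """Per texel: base + intensity * dynamic, clamped to 1.0."""
    return [[tuple(min(1.0, b + intensity * d) for b, d in zip(bt, dt))
             for bt, dt in zip(base_row, dyn_row)]
            for base_row, dyn_row in zip(base, dynamic)]


def flicker(t):
    # Hypothetical torch-like intensity curve, always within 0.5-1.0.
    return 0.75 + 0.25 * math.sin(t * 12.0)


if __name__ == "__main__":
    base = [[(0.2, 0.2, 0.2), (0.1, 0.1, 0.1)]]
    dynamic = [[(0.6, 0.4, 0.1), (0.0, 0.0, 0.0)]]  # only the first texel is lit
    for frame in range(3):
        t = frame / 24.0  # seconds at 24 fps
        print(combine_lightmaps(base, dynamic, flicker(t)))
```

Since only the intensity scalar changes per frame, the cost is one extra texture sample and a multiply-add per texel, which is what makes this a cheap stand-in for a real dynamic light.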

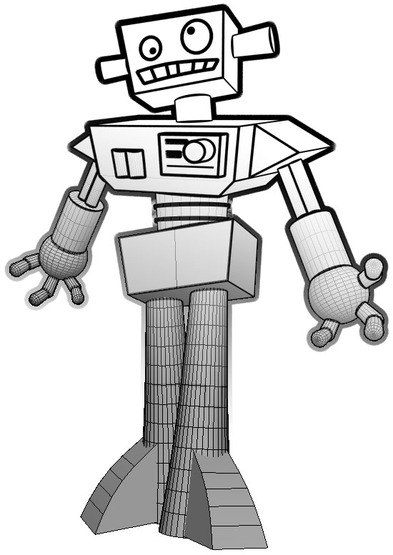

Using the toon shaders for illustrative renders. I made some line art, burned some screens, & printed the kiddos some cool custom T-shirts!

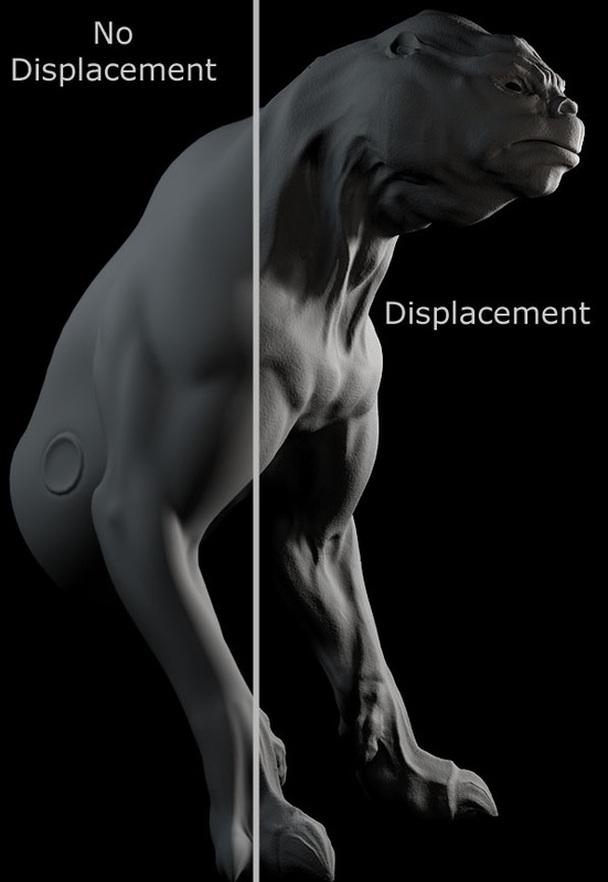

Displacement test with the 3Delight renderer.

Derek Jenson Blog

My website serves to archive experiments, document projects, share techniques, and motivate further exploration & artistry in 3D space.

Archives: June 2020
